I am the CTO of SMSBump. As the company's first software engineer, I took part in building its infrastructure and shaping its technological vision.

How did we create SMSBump?

As of today, SMSBump is 7 years old, but I want to take you back to the earliest phase of the product’s existence.

In 2015, Mihail Stoychev and Georgi Petrov decided to give a new form to short text messages (SMS) by turning them into a channel for marketing and communication.

The initial goal was to create a simpler, universal way to integrate with e-commerce platforms through an API. The resulting product, SMSBump, was aimed primarily at customers with technical knowledge. However, this was limiting, because customers had to build the integration for their business themselves.

These limitations led us to put the idea on hold until we decided that the main goal should be to make the product accessible to online stores. The only way to achieve that was to build the necessary modules and extensions for each e-commerce platform.

The path to e-commerce platforms

We built our first integration for OpenCart, because it was the company's primary market and we already had experience with it. Naturally, this was not enough: the market was small and limited to that one e-commerce platform.

Then came the idea to integrate with BigCommerce. The integration worked much like the OpenCart module, except that it was a standalone application. We built it with the Slim Framework (PHP), and it even ran on a shared server at a well-known Bulgarian hosting company.

Once again, we didn’t succeed: the BigCommerce market was focused primarily on enterprise companies, and we weren’t ready to offer the functionality such companies required.

The first application for Shopify

In 2016, the Shopify platform gained significant popularity in the online store segment, which prompted us to rethink the entire product. Our goal was to provide a ready-made solution to our users, enabling them to create successful SMS campaigns quickly and easily.

As the first software engineer at SMSBump, I began to see the value behind the idea and spent about 6 months working on the new version of the product.

We built the application on a rented DigitalOcean server (a droplet) that hosted the web server, the PHP application, the background processes, and the database. This was also when we first started using version control (Git). We used Apache as the web server and MySQL to store the sent messages, and yes, I must emphasize that everything ran on that one shared server. Whether out of inexperience or the need to move fast, it seemed like the right choice at the time.

The first Black Friday

I would describe it as truly ‘black’ because we didn’t handle it well.

Although the product had limited features, just one was enough to attract our first users: automated reminder messages for abandoned carts. None of us expected such interest. Our server was running at 100% capacity, and we couldn’t send all the campaigns. I would even say that we managed to send no more than 10-20 campaigns.

On the one hand, it was our first major failure; on the other, it showed us that the idea behind the product was good and that we needed to invest heavily in stability and speed.

Separating the database from the main server

After the Black Friday failure, we rented an additional server dedicated solely to the database. It stored absolutely everything about our customers: marketing campaigns, sent messages, users, prices, and more. We also began moving major functions onto separate servers. Every server ran exactly the same code; they differed only in which processes they ran.

At that point, I inevitably became a system administrator who had to orchestrate each server manually, configure it, and restart various processes whenever the code changed. We had five servers with no automation, which needed constant maintenance and ultimately slowed down the development of new features. It was far from optimal, because we had to write new features while maintaining all the servers by hand. And it was still just me and Misha.

That’s when we realized we needed more people, because I couldn’t keep up with everything as both programmer and system administrator. Evgeni Kolev joined the team; today he is a Team Manager at the company and one of our most experienced engineers.

On the technology side, we used HAProxy to load-balance web requests and a shared Redis server to keep user sessions consistent across all web servers. Static assets lived on shared disk storage mounted on each server.
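To illustrate why the shared Redis server mattered, here is a minimal Python sketch that simulates round-robin balancing (roughly what HAProxy's `balance roundrobin` does) across web servers that all read sessions from one shared store. All class names are hypothetical, and the in-memory dict is only a stand-in for Redis; in a PHP setup like ours, sessions would typically be pointed at Redis through a session handler such as the one phpredis provides.

```python
from itertools import cycle


class SharedSessionStore:
    """Hypothetical stand-in for the shared Redis server: one store visible to every web server."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        return self._data.get(session_id, {})

    def set(self, session_id, session):
        self._data[session_id] = session


class WebServer:
    """A web node that keeps no session state locally; it always reads and writes the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, session_id, item=None):
        cart = list(self.store.get(session_id).get("cart", []))
        if item is not None:
            cart.append(item)
        self.store.set(session_id, {"cart": cart})
        return self.name, cart


class RoundRobinBalancer:
    """Rough analogue of HAProxy round-robin: each request goes to the next backend in turn."""

    def __init__(self, servers):
        self._next = cycle(servers)

    def route(self, session_id, item=None):
        return next(self._next).handle(session_id, item)


store = SharedSessionStore()
lb = RoundRobinBalancer([WebServer(f"web{i}", store) for i in range(1, 4)])
print(lb.route("abc", "shoes"))  # handled by web1
print(lb.route("abc", "hat"))    # handled by web2, still sees "shoes"
```

Consecutive requests from the same visitor land on different servers, yet the cart survives because no server keeps session state of its own. With a separate store per server, the second request would start from an empty cart.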

Everything seemed fine until the next Black Friday reminded us that we were still not where we wanted to be. We sent out many campaigns, but some we couldn’t process at all. We lacked fundamentals such as automatic scaling, monitoring, and proper distribution of campaigns.

The most interesting part was that during Black Friday we chose by hand which campaigns to send, routed them by changing the code, and restarted processes so they would pick up the new campaigns. It was a stressful day, but also a valuable lesson in how to prioritize campaigns relative to one another.
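One way to replace that manual selection with code is a priority queue. The Python sketch below is hypothetical: the ordering rule (earliest scheduled send time first, then a higher numeric `priority`, then arrival order) and the `CampaignQueue` name are assumptions for illustration, not the rules we actually settled on.

```python
import heapq
from itertools import count


class CampaignQueue:
    """Hypothetical priority queue for campaigns, built on heapq (a binary min-heap)."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker: equal keys are served in arrival order

    def push(self, name, send_at, priority=0):
        # Assumed rule: earlier send_at first; for equal send_at, higher priority first.
        # Priority is negated because heapq always pops the smallest tuple.
        heapq.heappush(self._heap, (send_at, -priority, next(self._seq), name))

    def pop(self):
        _send_at, _neg_priority, _seq, name = heapq.heappop(self._heap)
        return name

    def __len__(self):
        return len(self._heap)


q = CampaignQueue()
q.push("newsletter", send_at=100, priority=1)
q.push("cart-reminder", send_at=100, priority=5)
q.push("early-promo", send_at=50)
while q:
    print(q.pop())  # early-promo, cart-reminder, newsletter
```

The unique sequence number keeps the tuples totally ordered, so two campaigns with the same time and priority never fall through to comparing names.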

Divide and conquer

Even though most components were now separated, many operations still put a heavy load on the main database. We began moving platform-related data into a separate database as we introduced features such as phone subscriber segmentation, which was itself a resource-intensive process.

As marketing campaigns kept growing, we created database replicas. We still managed them entirely by hand, and even backups were primitive: scripts we wrote ourselves ran a daily mysqldump of each database to disk.
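A home-grown backup script of that kind might look roughly like this Python sketch. The function names, the backup directory, the date-stamped file naming, and the retention policy (keep the newest `keep` dumps) are all hypothetical; credentials are assumed to come from a MySQL option file such as `~/.my.cnf`, which mysqldump reads automatically, and the script would be scheduled externally (for example, by cron).

```python
import datetime as dt
import subprocess
from pathlib import Path


def build_dump_command(database: str, backup_dir: Path, day: dt.date):
    """Return the mysqldump argument list and the date-stamped output path (naming is hypothetical)."""
    out = backup_dir / f"{database}-{day.isoformat()}.sql"
    cmd = [
        "mysqldump",
        "--single-transaction",  # consistent InnoDB snapshot without locking tables
        "--quick",               # stream rows instead of buffering whole tables in memory
        f"--result-file={out}",
        database,
    ]
    return cmd, out


def backups_to_prune(filenames, database, keep):
    """Assumed retention rule: keep the newest `keep` dumps (ISO dates sort lexicographically)."""
    ours = sorted(n for n in filenames if n.startswith(f"{database}-") and n.endswith(".sql"))
    return ours[:-keep] if keep > 0 else ours


def run_daily_backup(databases, backup_dir: Path, keep=7):
    backup_dir.mkdir(parents=True, exist_ok=True)
    today = dt.date.today()
    for db in databases:
        cmd, _ = build_dump_command(db, backup_dir, today)
        subprocess.run(cmd, check=True)  # raises CalledProcessError if mysqldump fails
        existing = [p.name for p in backup_dir.iterdir()]
        for name in backups_to_prune(existing, db, keep):
            (backup_dir / name).unlink()
```

Dumping to the same disk the database lives on, as this sketch would by default, is exactly the kind of shortcut that later made managed backups (such as RDS snapshots) attractive.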

We didn’t invest much in infrastructure. Orchestration, containerization, and the like were not part of our development plans; Docker and Kubernetes weren’t even on our radar. Our main goal was to compete in the market and become the leading SMS marketing platform on Shopify, delivering high returns for our clients. We focused on shipping features quickly and making them visible to end users, because that’s what sold.

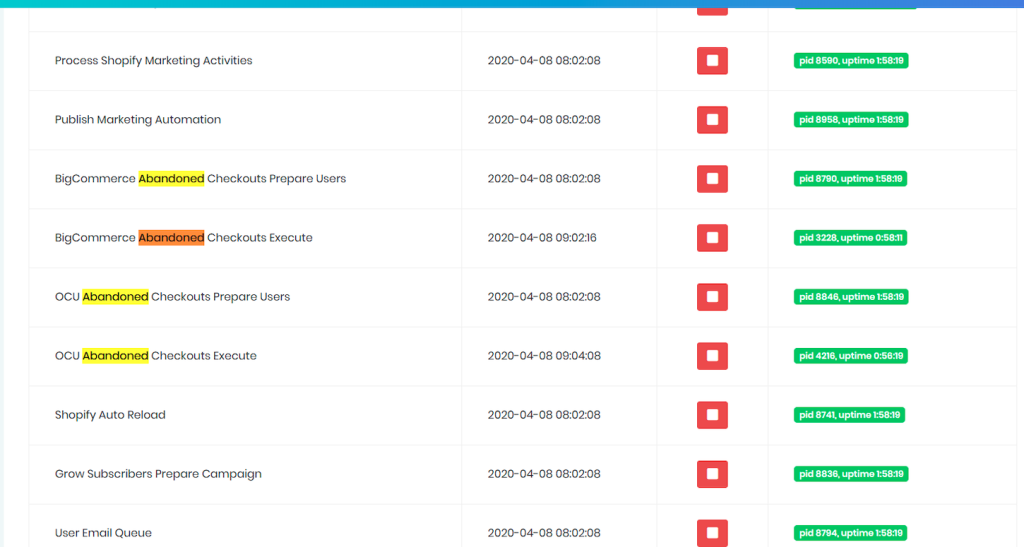

Supervisor was the process manager on every server, and we still managed it by hand. We built a panel so we no longer had to SSH into each server to see what was happening and which processes were or weren’t running. From the panel, we could restart processes and monitor the status of each service.

The panel also showed the logs from all servers and processes, which we centralized with rsyslog.
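A panel like that can be built on Supervisor's XML-RPC interface, which is available when `[inet_http_server]` is enabled in the Supervisor config. The Python sketch below is a hypothetical reconstruction, not our actual panel: the host names, port 9001, and function names are assumptions, while `getAllProcessInfo`, `getProcessInfo`, `stopProcess`, and `startProcess` are real Supervisor RPC methods.

```python
import xmlrpc.client


def _proxy(host, port=9001):
    # Assumes Supervisor's HTTP server listens on this host/port without auth.
    return xmlrpc.client.ServerProxy(f"http://{host}:{port}/RPC2")


def fetch_process_info(host, port=9001):
    """List every process Supervisor manages on one server (dicts with 'group', 'name', 'statename', ...)."""
    return _proxy(host, port).supervisor.getAllProcessInfo()


def restart_process(host, full_name, port=9001):
    """Restart 'group:name'; stopProcess faults unless the process is RUNNING, STARTING or BACKOFF."""
    supervisor = _proxy(host, port).supervisor
    if supervisor.getProcessInfo(full_name)["statename"] in ("RUNNING", "STARTING", "BACKOFF"):
        supervisor.stopProcess(full_name)
    supervisor.startProcess(full_name)


def summarize(infos_by_host):
    """Map each host to the 'group:name' of every process that is not RUNNING; healthy hosts are omitted."""
    problems = {}
    for host, infos in infos_by_host.items():
        down = [f"{p['group']}:{p['name']}" for p in infos if p["statename"] != "RUNNING"]
        if down:
            problems[host] = down
    return problems
```

In use, the panel would call `summarize({h: fetch_process_info(h) for h in hosts})` for a list of hypothetical `hosts` and offer a restart button for each entry it returns.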

The first successful Black Friday

In 2019, we had our first successful Black Friday. We had grown to 12 employees: 6 in technical roles and the rest in marketing and customer service.

We knew traffic would be high and were prepared for it. The marketing team had produced plenty of materials on how customers should set up their campaigns and guided them through launching them. The customer service team was available 24/7, with a response time of under 5 minutes. The technical team prepared all systems in advance and allocated more resources to our servers. We all worked from the office, following a schedule that assigned responsibilities and shifts. For instance, I was responsible for stability and monitoring, while someone else handled customer inquiries about functionality.

The path to Yotpo and a new architecture

At the beginning of 2020, a completely new chapter opened for SMSBump when the company was acquired by another marketing platform, Yotpo.

Joining Yotpo brought many new ideas that we wanted to implement in SMSBump: we now had the security of a larger company and could afford to build the architecture we wanted. We began a gradual migration to Amazon Web Services (AWS) and hired a DevOps team to lay the foundations for containerization with Docker. We adopted Infrastructure as Code with Terraform and, finally, introduced automatic scaling for various services.

Naturally, this was not an easy task. The migration from DigitalOcean to AWS took us about 6 months. We couldn’t simply move everything from one place to another, because we had decided to follow industry conventions and adopt the latest technologies. That meant a lot of code refactoring to make the services work in the new way.

During the migration, we relied heavily on managed AWS services, which reduced the infrastructure we had to maintain ourselves. Examples include ECS, Fargate, EventBridge, SQS, OpenSearch (Elasticsearch), RDS for MySQL, and ElastiCache.

Serverless services such as Lambda and API Gateway meant we no longer had to worry about hardware and could focus primarily on the code itself.

Where are we now?

Two years after the acquisition, SMSBump has solidified its market position and remains the top platform for sending short text messages.

The core technology stack has stayed the same. About 90% of the SMS product is written in PHP, mainly version 7.4, and we are gradually migrating to PHP 8. Our primary PHP framework is CakePHP.

We have several smaller serverless applications built on Node.js. The front end is built entirely in React.

For data storage, we use Amazon RDS for MySQL, Aurora MySQL, OpenSearch (Elasticsearch), ElastiCache (Redis), and DynamoDB.

For monitoring and tracing, we have integrations with Grafana, Prometheus, New Relic, and AWS CloudWatch.

We are breaking a significant portion of the monolithic application into microservices, which lets us organize our R&D teams around business domains. This gives each team autonomy and the freedom to implement its features without depending on other teams.

We actively write unit, component, integration, and end-to-end (E2E) tests, which makes deploying new code to our users more reliable.

We have started exploring a move from ECS to Kubernetes as our orchestrator, which we expect to give us more monitoring capabilities and easier integration with other Yotpo products.

Final words

I am often asked whether, with the experience I have today, I would do anything differently. Honestly, I wouldn’t, because that mindset helped us quickly create a product that immediately provided value to our users. Had we focused too much on building the perfect infrastructure, we would have fallen behind and ended up with a perfect infrastructure but few customers.

Although we are now close to 100 people at SMSBump, I’m glad that the company’s DNA remains the same, and we have the same motivation as before.

If you find yourself in this story, wanting to be part of a dynamic environment and innovate together, join Yotpo SMSBump.